Alexander Rubin

Guest

In this blog post, I’ll review how the InnoDB history length can affect a hung MySQL transaction.

Recently I was helping a customer discover why SELECT queries were running slower and slower until the server restarts (which got things back to normal). It took some time to get from that symptom to a final diagnosis. Please follow me on the journey of chasing this strange MySQL behavior!

Symptoms

Changes in the query response time can mean tons of things. We can check everything from the query plan to the disk performance. However, fixing it with a restart is less common. After looking at “show engine innodb status”, I noticed some strange lines:

Trx read view will not see trx with id >= 41271309593, sees < 41268384363

---TRANSACTION 41271309586, ACTIVE 766132 sec

2 lock struct(s), heap size 376, 0 row lock(s), undo log entries 1

...

There were a total of 940 transactions like this.

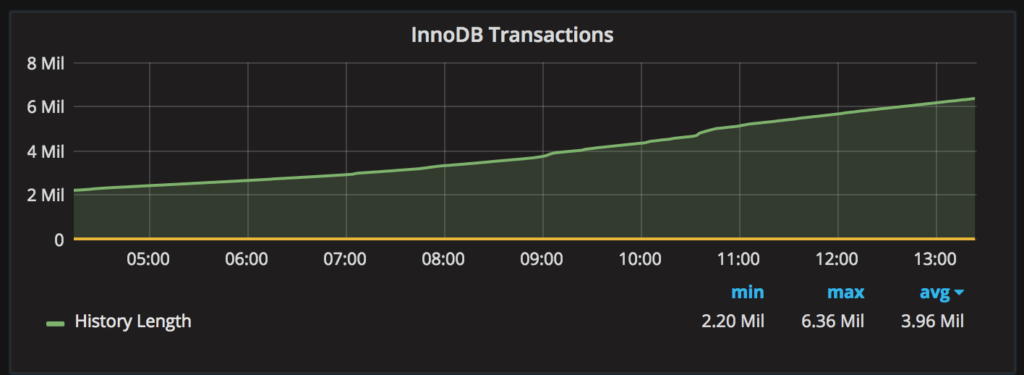

Another insight was the InnoDB transaction history length graph from Percona Monitoring and Management (PMM):

History length of 6 million and growing clearly indicates a problem.

Problem localized

There have been a number of blog posts describing a similar problem: Peter stated in a blog post: “InnoDB transaction history often hides dangerous ‘debt’“. As the InnoDB transaction history grows, SELECTs need to scan more and more previous versions of the rows, and performance suffers. That explains the issue: SELECT queries get slower and slower until restart. Peter also filed this bug: Major regression having many row versions.

But why does the InnoDB transaction history start growing? There are 940 transactions in this state: ACTIVE 766132 sec. MySQL’s process list shows those transactions in “Sleep” state. It turns out that those transactions were “lost” or “hung”. As we can also see, each of those transactions holds two lock structures and one undo record, so they are not committed and not rolled-back. They are sitting there doing nothing. In this case, with the default isolation level REPEATABLE-READ, InnoDB can’t purge the undo records (transaction history) for other transactions until these “hung” transactions are finished.

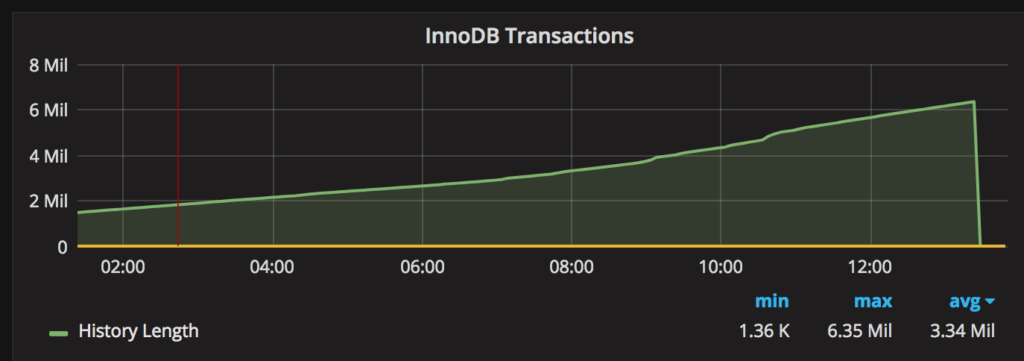

The quick solution is simple: kill those connections and InnoDB will roll back those transactions and purge transaction history. After killing those 940 transactions, the graph looked like this:

However, several questions remain:

- What are the queries inside of this lost transaction? Where are they coming from? The problem is that neither MySQL’s process list nor InnoDB’s status shows the queries for this transaction, as it is not running those queries right now (the process list is a snapshot of what is happening inside MySQL right at this moment)

- Can we fix it so that the “hung” transactions don’t affect other SELECT queries and don’t cause the growth of transaction history?

As it turns out, it is very easy to simulate this issue with sysbench.

Test preparation

To add some load, I’m using sysbench,16 threads (you can open less, it does not really matter here) and a script for a “write-only” load (running for 120 seconds):

conn=" --db-driver=mysql --mysql-host=localhost --mysql-user=user --mysql-password=password --mysql-db=sbtest "

sysbench --test=/usr/share/sysbench/tests/include/oltp_legacy/oltp.lua --mysql-table-engine=InnoDB --oltp-table-size=1000000 $conn prepare

sysbench --num-threads=16 --max-requests=0 --max-time=120 --test=/usr/share/sysbench/tests/include/oltp_legacy/oltp.lua --oltp-table-size=1000000 $conn

--oltp-test-mode=complex --oltp-point-selects=0 --oltp-simple-ranges=0 --oltp-sum-ranges=0

--oltp-order-ranges=0 --oltp-distinct-ranges=0 --oltp-index-updates=1 --oltp-non-index-updates=0 run

Simulate a “hung” transaction

While the above sysbench is running, open another connection to MySQL:

use test;

CREATE TABLE `a` (

`i` int(11) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=latin1;

insert into a values(1);

begin; insert into a values(1); select * from a;

Note: we will need to run the SELECT as a part of this transaction. Do not close the connection.

Watch the history

mysql> select name, count from information_schema.INNODB_METRICS where name like '%hist%';

+----------------------+-------+

| name | count |

+----------------------+-------+

| trx_rseg_history_len | 34324 |

+----------------------+-------+

1 row in set (0.00 sec)

mysql> select name, count from information_schema.INNODB_METRICS where name like '%hist%';

+----------------------+-------+

| name | count |

+----------------------+-------+

| trx_rseg_history_len | 36480 |

+----------------------+-------+

1 row in set (0.01 sec)

We can see it is growing. Now it is time to commit or rollback or even kill our original transaction:

mysql> rollback;

...

mysql> select name, count from information_schema.INNODB_METRICS where name like '%hist%';

+----------------------+-------+

| name | count |

+----------------------+-------+

| trx_rseg_history_len | 793 |

+----------------------+-------+

1 row in set (0.00 sec)

As we can see, it has purged the history length.

Finding the queries from the hung transactions

There are a number of options to find the queries from that “hung” transaction. In older MySQL versions, the only way is to enable the general log (or the slow query log). Starting with MySQL 5.6, we can use the Performance Schema. Here are the steps:

- Enable performance_schema if not enabled (it is disabled on RDS / Aurora by default).

- Enable events_statements_history:

mysql> update performance_schema.setup_consumers set ENABLED = 'YES' where NAME='events_statements_history';

Query OK, 1 row affected (0.00 sec)

Rows matched: 1 Changed: 1 Warnings: 0 - Run the query to find all transaction started 10 seconds ago (change the number of seconds to match your workload):

SELECT ps.id as processlist_id,

trx_started, trx_isolation_level,

esh.EVENT_ID,

esh.TIMER_WAIT,

esh.event_name as EVENT_NAME,

esh.sql_text as SQL,

esh.RETURNED_SQLSTATE, esh.MYSQL_ERRNO, esh.MESSAGE_TEXT, esh.ERRORS, esh.WARNINGS

FROM information_schema.innodb_trx trx

JOIN information_schema.processlist ps ON trx.trx_mysql_thread_id = ps.id

LEFT JOIN performance_schema.threads th ON th.processlist_id = trx.trx_mysql_thread_id

LEFT JOIN performance_schema.events_statements_history esh ON esh.thread_id = th.thread_id

WHERE trx.trx_started < CURRENT_TIME - INTERVAL 10 SECOND

AND ps.USER != 'SYSTEM_USER'

ORDER BY esh.EVENT_IDG

...

PROCESS ID: 1971

trx_started: 2017-05-03 17:36:47

trx_isolation_level: REPEATABLE READ

EVENT_ID: 79

TIMER_WAIT: 33767000

EVENT NAME: statement/sql/begin

SQL: begin

RETURNED_SQLSTATE: 00000

MYSQL_ERRNO: 0

MESSAGE_TEXT: NULL

ERRORS: 0

WARNINGS: 0

*************************** 9. row ***************************

PROCESS ID: 1971

trx_started: 2017-05-03 17:36:47

trx_isolation_level: REPEATABLE READ

EVENT_ID: 80

TIMER_WAIT: 2643082000

EVENT NAME: statement/sql/insert

SQL: insert into a values(1)

RETURNED_SQLSTATE: 00000

MYSQL_ERRNO: 0

MESSAGE_TEXT: NULL

ERRORS: 0

WARNINGS: 0

*************************** 10. row ***************************

PROCESS ID: 1971

trx_started: 2017-05-03 17:36:47

trx_isolation_level: REPEATABLE READ

EVENT_ID: 81

TIMER_WAIT: 140305000

EVENT NAME: statement/sql/select

SQL: select * from a

RETURNED_SQLSTATE: NULL

MYSQL_ERRNO: 0

MESSAGE_TEXT: NULL

ERRORS: 0

WARNINGS: 0

Now we can see the list of queries from the old transaction (the MySQL query used was taken with modifications from this blog post: Tracking MySQL query history in long running transactions).

At this point, we can chase this issue at the application level and find out why this transaction was not committed. The typical causes:

- There is a heavy, non-database-related process inside the application code. For example, the application starts a transaction to get a list of images for analysis and then starts an external application to process those images (machine learning or similar), which can take a very long time.

- The application got an uncaught exception and exited, but the connection to MySQL was not closed for some reason (i.e., returned to the connection pool).

We can also try to configure the timeouts on MySQL or the application so that the connections are closed after “N” minutes.

Changing the transaction isolation level to fix the InnoDB transaction history issue

Now that we know which transaction is holding up the purge process of InnoDB history, we can find this transaction and make changes so it will not “hang”. We can change the transaction isolation level from REPEATABLE READ (default) to READ COMMITTED. In READ COMMITTED, InnoDB does not need to maintain history length when other transactions have committed changes. (More details about different isolation methods and how they affect InnoDB transactions.) That will work in MySQL 5.6 and later. However this doesn’t work in Amazon Aurora (as of now): even with READ COMMITTED isolation level, the history length still grows.

Here is the list of MySQL versions where changing the isolation level fixes the issue

MySQL Version Transaction isolation InnoDB History Length

MySQL 5.6 repeatable read history is not purged until “hung” transaction finishes

MySQL 5.6 read committed (fixed) history is purged

Aurora repeatable read history is not purged until “hung” transaction finishes

Aurora read committed history is not purged until “hung” transaction finishes

Summary

Hung transactions can cause the InnoDB history length to grow and (surprisingly, on the first glance) affect the performance of other running select queries. We can use the performance schema to chase the “hung” transaction. Changing the MySQL transaction isolation level can potentially help.

Читать дальше...